My Answer to: "Why do YOU hate AI?"

I don’t.

I hate how it is used by some people who just copy-paste the answer from the AI without any cross-checking, testing, or research and then arrogantly defend it in discussions like they know what they are talking about.

Introduction

Sadly, I have taken part in a few arguments where I was “fighting against the AI answers and propositions” and not the person’s knowledge or experience. The funny thing is that I was mostly right in those situations. Even if I missed some details, I was absolutely right regarding the proposed infrastructure, system capabilities, and the way the system should work. How is that even remotely possible?

"For those who understand, no explanation is needed. For those who do not understand, no explanation is possible."

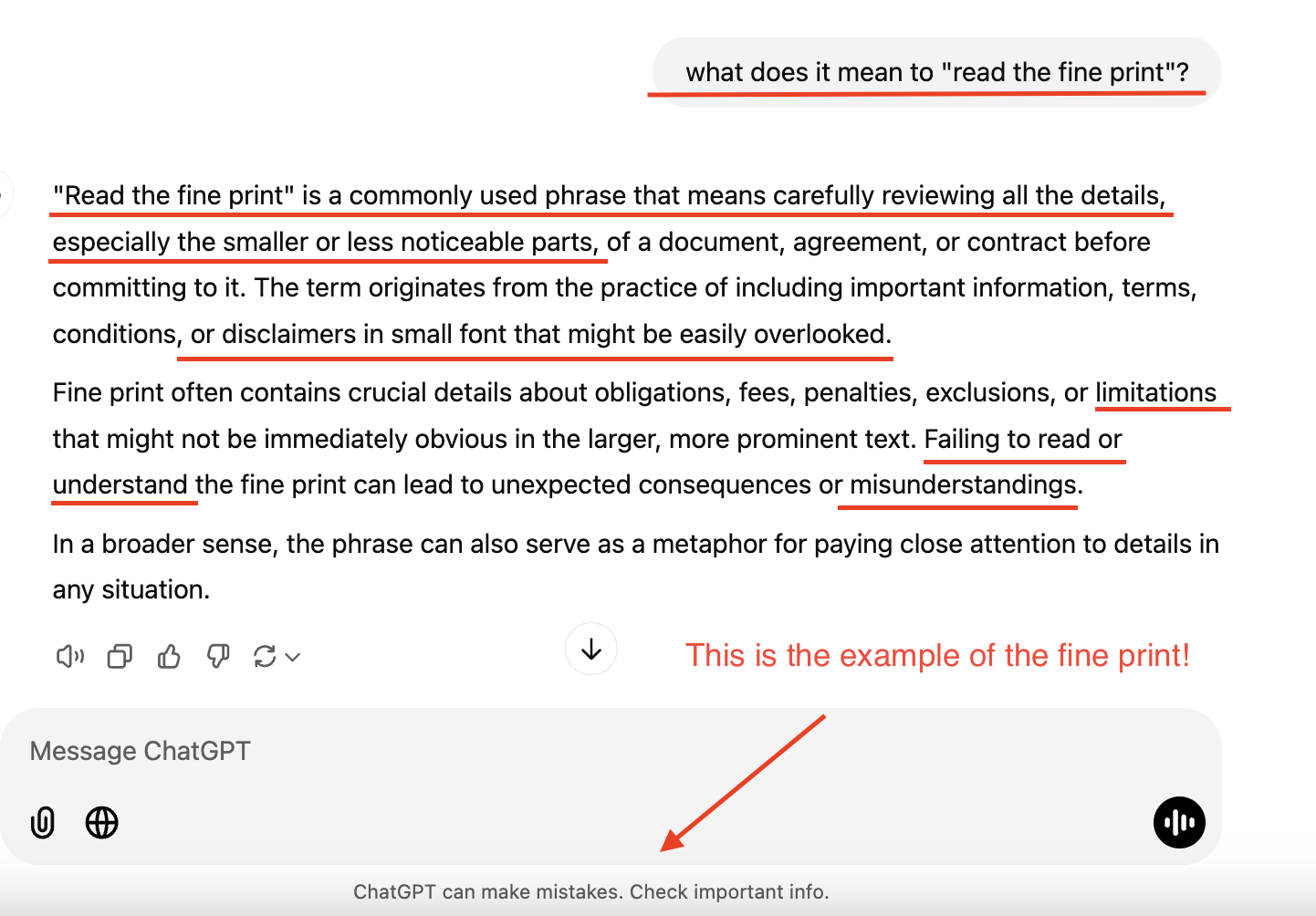

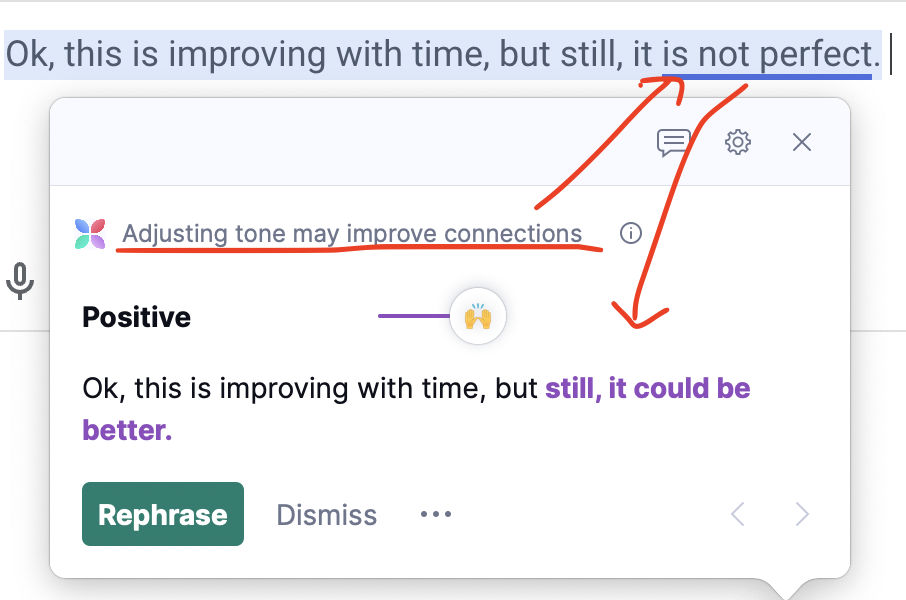

The AI is trained on the data available at a given point in time. Data preparation and training take several months, and collecting the data can take even longer, meaning that the most recent documents might not be included. OK, this is improving with time, but it is still not perfect.

"Fine print is proof that some people’s full-time job is making your life harder."

The Problem!

The problem is when AI is not used as a tool but blindly followed as a single source of truth. Those who take for granted whatever output is returned for their cleverly written prompt are a by-product of the hype. I have a problem with that, and I know that I am not the only one. Are we a minority or not? It’s debatable, but there is a decent number of people who are directly affected by the outbreak (yes, the pun is intended) of GPT experts.

Whoever works on designing modern software architectures understands that even though some standard patterns and best practices exist, there is no such thing as a “one-size-fits-all” solution. This is where the “human touch” is very important. The human solution architect will know all the nuances of the system, the business requirements, the budget, real-world issues, and where to make trade-offs, and will be able to decide what best fits the use case. When it comes to AI, we will lose more time generating prompts to describe the current architecture, with the risk of getting vague answers, than actually coming up with the solution based on expertise and experience. I am not saying that AI cannot help during the process of design, but it should NEVER be the source of truth. I don’t know what the future will look like, but AI cannot replace humans in this case, at least not yet.

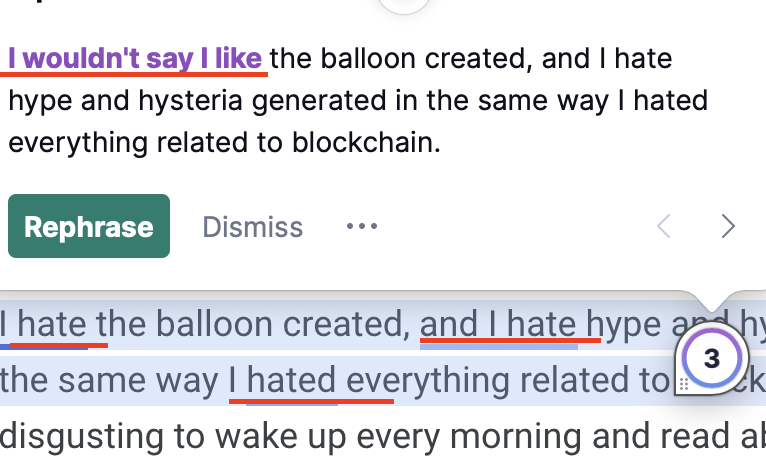

I hate the bubble that has been created, and I hate the hype and hysteria around it, in the same way I hated everything related to blockchain. It was disgusting to wake up every morning and read about a new blockchain startup that would “disrupt” the industry. The hype was so big that it was pushed into every place where it didn’t belong. The same is happening with AI.

The Tools

From sharp stones to heavy machinery, all the way to the computer, we have been using and improving tools to make our lives easier. I like the name GitHub chose: “CoPilot.” That is precisely what it is. YOU are the pilot, YOU are in control, YOU are responsible for the code or architecture or any decision made as a product of using those tools. In order to use the tool, you need to have solid knowledge and experience in the area you are working in. Would you allow your dentist to do a procedure on your teeth just by following the answers from the AI? The question is rhetorical.

Am I using AI? Yes, I am using CoPilot mostly and Grammarly sometimes. I have tested ChatGPT extensively on several occasions, both recently and in the past, usually when I wanted to understand some term or to learn about a specific topic. I tried a lot of things, from writing songs and guitar chords to fishing, dog breeding, programming, and system architecting, but I did not always get very reliable results.

This is my opinion on how everyone should approach any topic they want to discuss. Try to learn as much as you can, ask questions, and be open-minded. I didn’t just say, “I hate AI.” There are layers within that hate.

I am not pretending to be an AI power user, because I am not one. I am using it as a tool in the best way I can, with common sense and a critical approach. The bottom line is that if I am “discussing” with ChatGPT and not YOU, then YOU are not needed. ChatGPT (or any other AI tool) is not ready to replace a live human expert, meaning I will look for another person to take your place. If I want to get something from the engine, I can type the prompt and read the answer by myself.

To show that, let’s go through some negative examples.

Examples

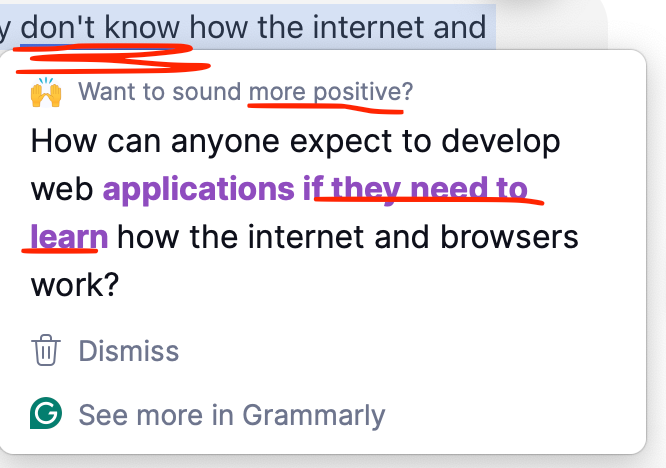

Grammarly

Let’s start with Grammarly. I keep getting suggestions that sometimes change the whole context of the sentence. I am the one who writes the text and decides on the tone and context. The problem, as usual, is with humans, not the machines. If my parents didn’t teach me to be nice and polite, I can assure you that neither will the aspiring idiot who trained the model.

By the way, I am canceling my subscription because of this. If you know a good alternative, please let me know.

CoPilot

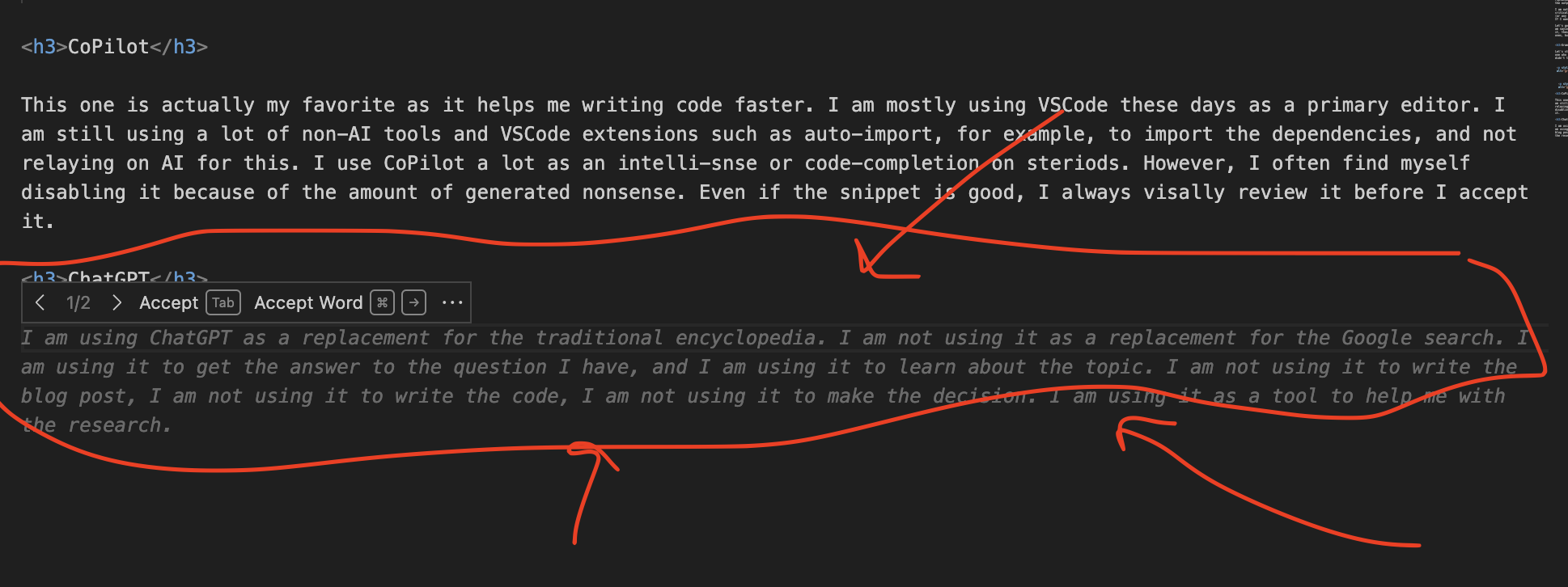

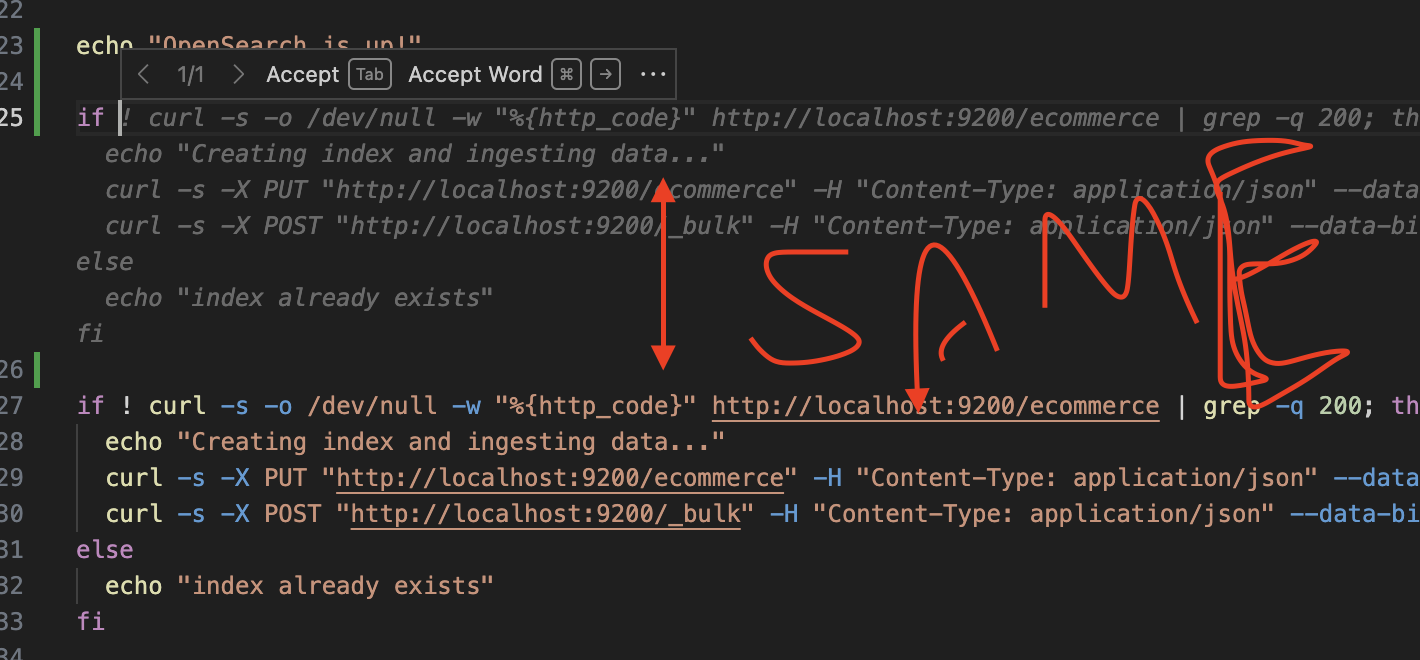

This one is actually my favorite, as it helps me write code faster. I am mostly using VSCode these days as my primary editor. I still use a lot of non-AI tools and VSCode extensions, such as auto-import, to import dependencies rather than rely on AI for this. I use CoPilot a lot as IntelliSense or code completion on steroids. However, I often find myself disabling it because of the amount of generated nonsense. Even if the snippet is good, I always visually review it before I accept it… perfect timing, I forgot to turn it off, and here is the result:

ChatGPT

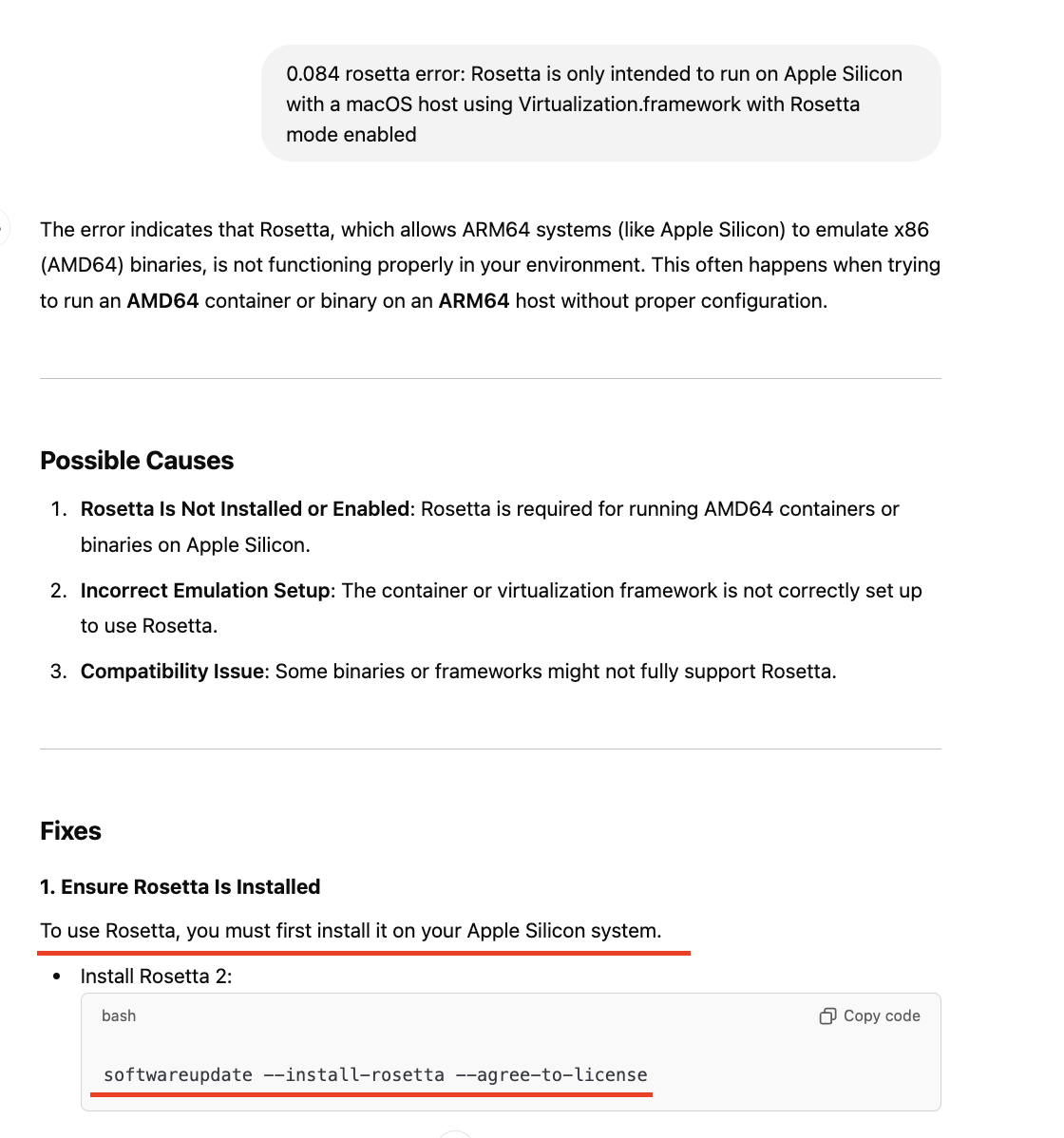

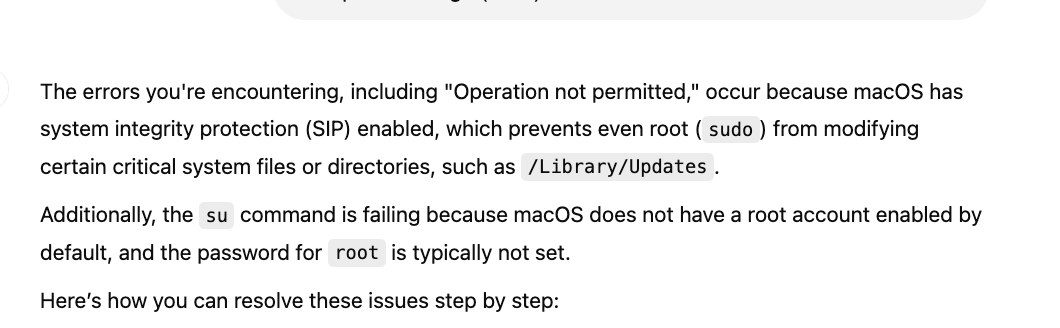

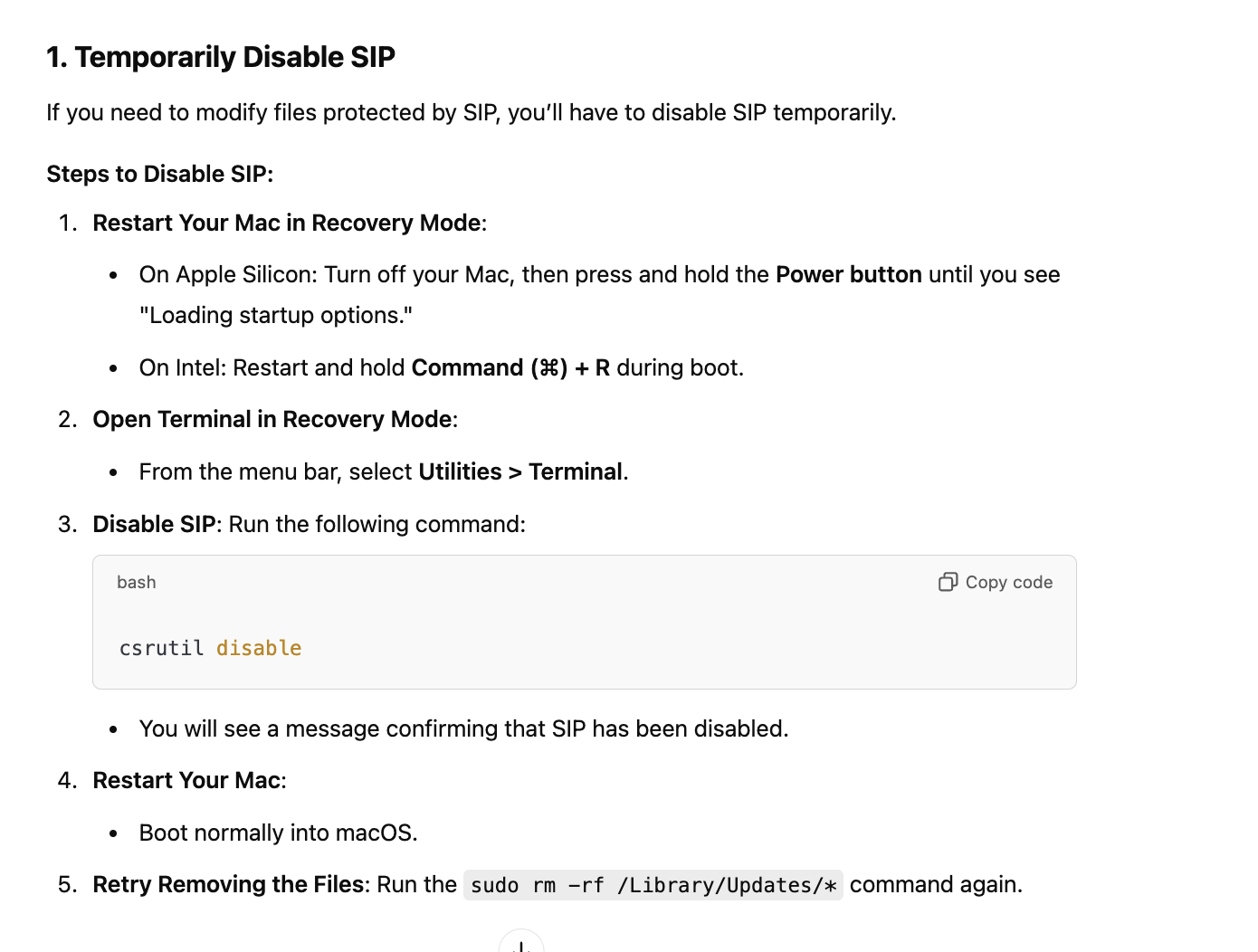

As I said, I tested ChatGPT many times with both simple and more complex things. Most of the time the answers were OK, sometimes very extensive, while in some cases the explanation was outdated or completely wrong. Here is the most recent example of an issue I tried to solve with ChatGPT. The provided answers were somewhat on track, but the proposed solution was horrible. Of course, I rejected it and found the way out on my own. Spoiler alert: the only thing needed was to update Docker Desktop! Now, have a look at the proposition:

Now the instructions go into more detail:

- Ensure macOS Is Up to Date

- Manually Install Rosetta

- Reset Software Update Cache

- Reinstall macOS (Without Wiping Data)

- Verify Rosetta Installation

And finally, with the sound of fanfare, the ultimate solution proposed by ChatGPT:

Would you follow this and do what was recommended? The solution was, I repeat, only to update Docker Desktop. By the way, here is something helpful about installing Rosetta.

Conclusion

That’s all I have for now. For the geniuses reading this as “DO NOT USE AI,” again, NO! That’s not what I am saying! Use AI as a tool where it makes sense, and use it to speed up your production. Do not blindly trust it. These are just a few examples of why not to.

Does that answer your question?