Exposing a C Program via Flask on AWS Fargate

Introduction

What started as an internal nerd joke, which I will not even try to explain, has unexpectedly turned out to be a nice example of cloud architecture. It involves several components and covers a lot of topics related to deploying Docker containers on AWS, as well as using Flask to execute an ordinary C program. The purpose of the included C program might be questionable, but that’s not the point. The real takeaway is that you can take a legacy application running on your server and expose it as a REST API. You can even use this approach to control your server remotely, automate tasks, or change configuration settings. This setup has a lot of potential use cases.

Prerequisites

- Basic understanding of what a container is and the purpose of Docker

- Basic understanding of AWS Fargate

- AWS CLI installed and configured with your credentials

- AWS CDK installed

```shell
npm install -g aws-cdk
```

Talk is cheap; let’s move on to the implementation. The full source code, ready to deploy on AWS Fargate, is available on GitHub.

Step 1: Executable C program

For the purpose of this demo, we need an executable. In the repository, you’ll find the source code for a program that allegedly “compresses the word.” It takes the word “Hierarchy,” UTF-8 encoded (1 byte per character), and then confidently creates a 4-bit representation for each character. Does it work? Probably… as long as you don’t use any characters whose position in the alphabet is greater than 15. One more bit per character (5 bits, enough for 32 values) would cover the whole alphabet, but who cares? The overly complicated logic is there for reasons that are out of the scope of this article.
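To make the 4-bit idea concrete, here is a hypothetical TypeScript sketch of the same trick. It is not a port of the repository’s compress.c: the mapping (a = 1 … z = 26, case-insensitive) and the names `alphabetPosition`, `packWord`, and `unpackWord` are assumptions. Each letter becomes a 4-bit code and two codes share one byte, so a 5-letter word fits in 3 bytes. By this rule, the r (18) and y (25) in “Hierarchy” already overflow, which is exactly the limitation described above.

```typescript
// Hypothetical sketch, not the repository's compress.c.
// Assumed mapping: 'a' -> 1 ... 'z' -> 26 (case-insensitive).
function alphabetPosition(ch: string): number {
  const code = ch.toLowerCase().charCodeAt(0);
  if (code < 97 || code > 122) {
    throw new RangeError(`Not an ASCII letter: ${ch}`);
  }
  return code - 96;
}

// Packs each letter into 4 bits, two letters per byte (high nibble first).
function packWord(word: string): Uint8Array {
  const packed = new Uint8Array(Math.ceil(word.length / 2));
  for (let i = 0; i < word.length; i++) {
    const pos = alphabetPosition(word[i]);
    if (pos > 15) {
      // 4 bits only hold 0..15; a fifth bit would cover all 26 letters.
      throw new RangeError(`'${word[i]}' (position ${pos}) does not fit in 4 bits`);
    }
    packed[i >> 1] |= i % 2 === 0 ? pos << 4 : pos;
  }
  return packed;
}

// Reverses packWord; the original length tells us whether the last low nibble is used.
function unpackWord(packed: Uint8Array, length: number): string {
  let word = '';
  for (let i = 0; i < length; i++) {
    const byte = packed[i >> 1];
    const pos = i % 2 === 0 ? byte >> 4 : byte & 0x0f;
    word += String.fromCharCode(96 + pos);
  }
  return word;
}
```

For example, `packWord('beach')` yields the three bytes 37, 19, and 128, and `unpackWord` turns them back into `beach`, while `packWord('Hierarchy')` throws a `RangeError` at the first r.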

Contributing to compress.c

If you still have a problem with this, feel free to submit a PR with a fix. Just make sure it has 100% test coverage and the static analysis shows a cognitive complexity no higher than 1. We have to maintain our high standards, so don't forget to document the byte-shifting logic in the README file (UML diagram included, of course). If you make it more pointless, I will gladly accept the PR.

Step 3: Dockerfile

We use a Docker container to run the application. The Dockerfile in the repository root installs the dependencies, builds the C program, and then runs the Flask application. We can build and test the container locally by running the following commands:

```shell
docker build -t compress-word .
# Map port 3333 on the host to port 3333 in the container.
docker run -p 3333:3333 compress-word
```

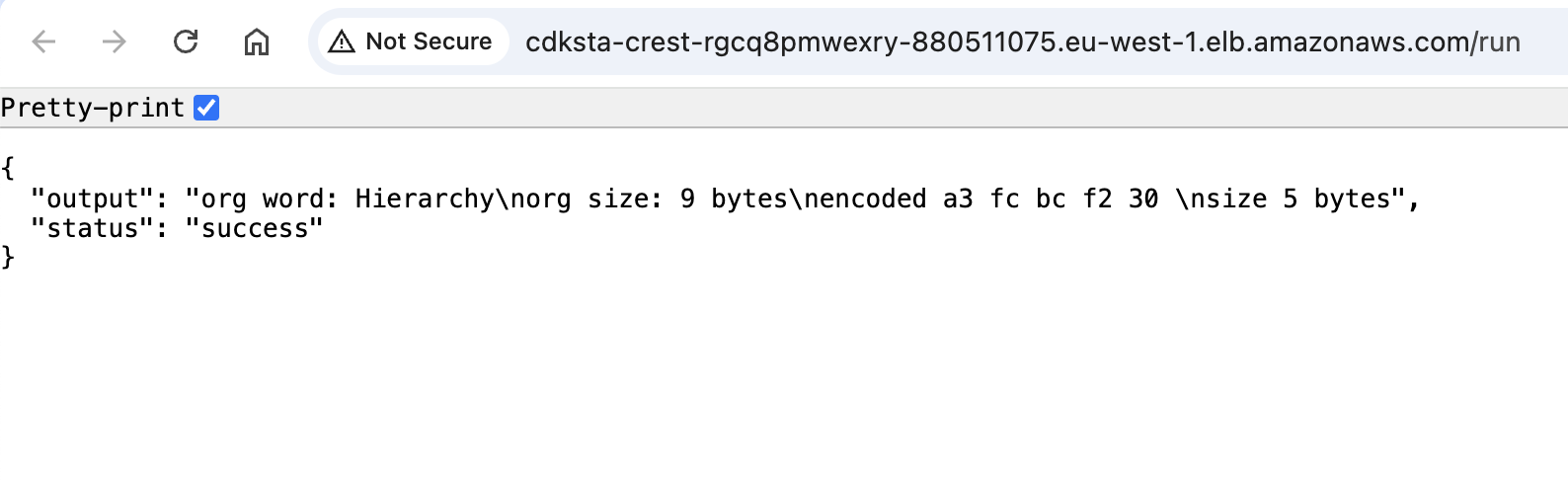

Open your browser and navigate to http://localhost:3333/run; you should see the output of the C program.
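If you prefer a scripted check over the browser, the following TypeScript sketch requests `/run` and fails unless it gets HTTP 200 with a non-empty body. The function name `smokeTest` and the injectable `FetchLike` type are assumptions, chosen so the check can be exercised without a running container; in Node.js 18 or later you can pass the built-in `fetch`.

```typescript
// Minimal subset of the fetch API that the check relies on.
type FetchLike = (url: string) => Promise<{ status: number; text(): Promise<string> }>;

// Hypothetical smoke test: GET <baseUrl>/run and return the body, or throw on failure.
async function smokeTest(baseUrl: string, fetchImpl: FetchLike): Promise<string> {
  const url = `${baseUrl.replace(/\/+$/, '')}/run`;
  const response = await fetchImpl(url);
  if (response.status !== 200) {
    throw new Error(`GET ${url} returned HTTP ${response.status}`);
  }
  const body = await response.text();
  if (body.trim().length === 0) {
    throw new Error(`GET ${url} returned an empty body`);
  }
  return body;
}
```

For example, `smokeTest('http://localhost:3333', fetch)` checks the local container, and the same call with `http://` plus the load balancer’s DNS name checks the deployed service later.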

Step 4: AWS Fargate

This step is tricky but very instructive. If you have never had a chance to use Fargate, this is the perfect opportunity to try it. According to AWS, Fargate is “a serverless compute service” for containers. In practice, this means you do not provision or manage any servers: you describe the task (container image, CPU, memory, networking) and AWS runs it, so you can focus on the application instead. Running a container on Fargate still takes a little preparation, and we will provision all the resources with AWS CDK.

First, let’s take care of the network part of our infrastructure: a VPC and security groups. We don’t want a NAT gateway, because our service does not need to reach the public internet; the only outside service it needs is the Elastic Container Registry (ECR), from which it pulls the container image. For this purpose, we will create an ECR interface endpoint. It’s not the most elegant solution, but here it is still cheaper than a NAT gateway. That is not always the case, though: every time you create a service, do the math and make sure you are not overpaying. There is no one-size-fits-all solution. Always remember that. You can thank me later. Note that only the relevant code snippets are shown here; the complete stack is in the repository.
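The “do the math” advice fits in a few lines. The sketch below compares a NAT gateway with interface endpoints using the billing structure both services publish: an hourly charge (per NAT gateway, or per endpoint per Availability Zone) plus a per-GB data-processing charge. All rates are parameters on purpose, the function names are hypothetical, and data-transfer charges are ignored; plug in the current prices for your region from the AWS pricing pages.

```typescript
// Hypothetical cost model; rates are inputs, not real prices.
interface HourlyPlusDataRate {
  hourlyRate: number; // price per hour for one billable unit
  perGbRate: number; // data-processing price per GB
}

const HOURS_PER_MONTH = 730; // common approximation of the hours in a month

// NAT gateways: billed per gateway-hour plus processed data.
function natMonthlyCost(rate: HourlyPlusDataRate, gateways: number, gbPerMonth: number): number {
  return rate.hourlyRate * HOURS_PER_MONTH * gateways + rate.perGbRate * gbPerMonth;
}

// Interface endpoints: billed per endpoint per AZ-hour plus processed data.
function endpointsMonthlyCost(
  rate: HourlyPlusDataRate,
  endpoints: number,
  azs: number,
  gbPerMonth: number,
): number {
  return rate.hourlyRate * HOURS_PER_MONTH * endpoints * azs + rate.perGbRate * gbPerMonth;
}

function cheaperOption(natCost: number, endpointCost: number): 'nat' | 'endpoints' {
  return endpointCost <= natCost ? 'endpoints' : 'nat';
}
```

Note how the endpoint side scales with both the number of endpoints and the number of Availability Zones: the two AZs used in this stack double it, so the answer can flip as you add endpoints.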

Creating a VPC with a custom CIDR block and no NAT gateway:

```typescript
const vpc = new ec2.Vpc(this, 'VPC', {
  maxAzs: 2,
  ipAddresses: ec2.IpAddresses.cidr('192.168.0.0/16'),
  natGateways: 0,
});
```
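The /16 block gives the VPC 65,536 addresses, which CDK carves into subnets for you. To see the arithmetic, the sketch below splits an IPv4 CIDR into equal power-of-two subnets and counts usable addresses, remembering that AWS reserves five addresses in every subnet (the first four and the last one). The helper names are hypothetical, and CDK’s own allocation may choose different sizes and ordering.

```typescript
// Converts a dotted-quad IPv4 address to an unsigned integer (0 .. 2^32 - 1).
function ipToInt(ip: string): number {
  const parts = ip.split('.');
  if (parts.length !== 4 || parts.some((p) => !/^\d{1,3}$/.test(p) || Number(p) > 255)) {
    throw new Error(`Invalid IPv4 address: ${ip}`);
  }
  // Multiplication instead of << avoids JavaScript's signed 32-bit bitwise results.
  return parts.reduce((acc, p) => acc * 256 + Number(p), 0);
}

function intToIp(n: number): string {
  return [24, 16, 8, 0].map((shift) => Math.floor(n / Math.pow(2, shift)) % 256).join('.');
}

// Splits a CIDR block into `count` equal subnets; `count` must be a power of two.
function splitCidr(cidr: string, count: number): string[] {
  const [base, prefixText] = cidr.split('/');
  const prefix = Number(prefixText);
  let extraBits = 0;
  while (Math.pow(2, extraBits) < count) extraBits += 1;
  const newPrefix = prefix + extraBits;
  const start = ipToInt(base);
  if (Math.pow(2, extraBits) !== count || newPrefix > 32 || start % Math.pow(2, 32 - prefix) !== 0) {
    throw new Error(`Cannot split ${cidr} into ${count} equal subnets`);
  }
  const size = Math.pow(2, 32 - newPrefix);
  const subnets: string[] = [];
  for (let i = 0; i < count; i++) {
    subnets.push(`${intToIp(start + i * size)}/${newPrefix}`);
  }
  return subnets;
}

// AWS reserves five addresses in every subnet (the first four and the last one).
function usableAddresses(prefix: number): number {
  return Math.pow(2, 32 - prefix) - 5;
}
```

Splitting `192.168.0.0/16` four ways gives four /18 subnets (`192.168.0.0/18` through `192.168.192.0/18`), each with 16,379 usable addresses, which is far more than a single Fargate task needs.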

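Because there is no NAT gateway, resources in the VPC’s private subnets cannot reach ECR over the public internet. This is where the interface endpoint comes in: it gives the private network a direct connection to an AWS service through AWS PrivateLink.

```typescript
// Interface endpoint for the ECR API (com.amazonaws.<region>.ecr.api).
vpc.addInterfaceEndpoint('ECREndpoint', {
  service: ec2.InterfaceVpcEndpointAwsService.ECR,
});
```

Keep in mind that `InterfaceVpcEndpointAwsService.ECR` covers only the ECR API (`ecr.api`). A fully private image pull also needs the `ecr.dkr` endpoint (`InterfaceVpcEndpointAwsService.ECR_DOCKER`) and an S3 gateway endpoint, because ECR serves image layers from Amazon S3; the `awslogs` log driver used later additionally needs the CloudWatch Logs endpoint. In this stack, the service is also created with `assignPublicIp: true`, which places the task in a public subnet, so the image pull can go out through the internet gateway instead.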

Remember that our Flask API listens on port 3333, so this port must be open in the security group: the load balancer receives traffic on port 80 and forwards it to the Fargate task on port 3333. The snippet below creates the internet-facing Application Load Balancer and assumes a `securityGroup` defined earlier in the stack (not shown here).

```typescript
const loadBalancer = new elbv2.ApplicationLoadBalancer(this, 'CRestALB', {
  vpc,
  internetFacing: true,
  securityGroup,
});
```
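The two hops described above (internet to the load balancer on port 80, load balancer to the task on port 3333) can be checked with a tiny model of security-group ingress rules. This is a simplified, hypothetical model written for illustration: real security groups also match protocols, CIDR ranges, and referenced groups, and they are stateful, so return traffic is allowed automatically. Here a rule only names a source (a group name or `'internet'`) and a port.

```typescript
// Simplified model: a rule allows TCP traffic from one source to one port.
interface IngressRule {
  source: string; // 'internet' or the name of a security group
  port: number;
}

interface Hop {
  from: string; // security group of the sender, or 'internet'
  toGroup: string; // security group of the receiver
  port: number;
}

function isAllowed(rules: Record<string, IngressRule[]>, hop: Hop): boolean {
  const groupRules = rules[hop.toGroup] ?? [];
  return groupRules.some((rule) => rule.port === hop.port && rule.source === hop.from);
}

// Returns the hops that no rule allows; an empty array means the whole path is open.
function blockedHops(rules: Record<string, IngressRule[]>, path: Hop[]): Hop[] {
  return path.filter((hop) => !isAllowed(rules, hop));
}
```

Because this stack attaches the same `securityGroup` to both the load balancer and the service, the second hop is a self-referencing rule. A common alternative is two separate groups, which makes it more explicit that only the load balancer can reach port 3333.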

Now it is time to create an ECS cluster. Elastic Container Service (ECS) is the AWS service for container orchestration. It is much simpler than Kubernetes (by the way, there is Amazon EKS for hosting Kubernetes workloads if you want to go that way), but it is probably everything you will ever need. ECS is deeply integrated with other AWS services, which helps a lot. One thing to note (we need to keep the K8s gatekeepers happy) is that running Kubernetes gives you more flexibility and control, but at the price of complexity. Choose wisely.

```typescript
const cluster = new ecs.Cluster(this, 'Cluster', {
  vpc,
});
```

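The purpose of the cluster is to run our tasks. A task is a running instance of a task definition, which describes one or more containers. On Fargate, each task runs in its own isolated environment, meaning that CPU and memory are not shared with other tasks. To run our task, we need a task definition (CPU and memory requirements, a task role, and an execution role) and a service that keeps the task running. The two roles have different jobs: ECS uses the execution role to pull the image from ECR and send logs, while the task role is the identity the application code itself runs as. For the execution role, we can use the AWS managed policy `AmazonECSTaskExecutionRolePolicy`, which already includes the ECR pull permissions. The task role below is also given read access to the `c-rest` repository, which only matters if the application itself calls ECR; note that `ecr:GetAuthorizationToken` does not support resource-level permissions, so it has to be granted on `*`. Whenever you customize a role, follow the principle of least privilege.

```typescript
const taskRole = new iam.Role(this, 'CRestTaskRole', {
  assumedBy: new iam.ServicePrincipal('ecs-tasks.amazonaws.com'),
});

// Read actions scoped to the c-rest repository.
taskRole.addToPolicy(new iam.PolicyStatement({
  actions: [
    'ecr:BatchCheckLayerAvailability',
    'ecr:GetDownloadUrlForLayer',
    'ecr:DescribeRepositories',
    'ecr:ListImages',
    'ecr:BatchGetImage',
  ],
  resources: [`arn:aws:ecr:${this.region}:${this.account}:repository/c-rest`],
}));

// GetAuthorizationToken has no resource-level permissions, so it must use "*".
taskRole.addToPolicy(new iam.PolicyStatement({
  actions: ['ecr:GetAuthorizationToken'],
  resources: ['*'],
}));
```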

```typescript
const executionRole = new iam.Role(this, 'CRestExecutionRole', {
  assumedBy: new iam.ServicePrincipal('ecs-tasks.amazonaws.com'),
  managedPolicies: [
    iam.ManagedPolicy.fromAwsManagedPolicyName('service-role/AmazonECSTaskExecutionRolePolicy'),
  ],
});
```
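One IAM detail worth checking in any ECR policy: `ecr:GetAuthorizationToken` does not support resource-level permissions, so it only takes effect when granted on `"*"`. The sketch below is a hypothetical pre-deployment lint, not part of CDK or IAM: it builds a repository ARN in the same format as the stack and flags statements that scope that action to anything else. The list of account-level actions holds only this one action, an assumption you would extend for your own policies.

```typescript
// Plain-data view of a policy statement (not the CDK PolicyStatement class).
interface StatementLike {
  actions: string[];
  resources: string[];
}

// Actions without resource-level permissions; assumption: only the ECR one used here.
const ACCOUNT_LEVEL_ACTIONS: string[] = ['ecr:GetAuthorizationToken'];

function ecrRepositoryArn(region: string, account: string, repository: string, partition = 'aws'): string {
  return `arn:${partition}:ecr:${region}:${account}:repository/${repository}`;
}

// Returns human-readable problems; an empty array means no issues were found.
function lintStatements(statements: StatementLike[]): string[] {
  const problems: string[] = [];
  for (const statement of statements) {
    for (const action of statement.actions) {
      const accountLevel = ACCOUNT_LEVEL_ACTIONS.indexOf(action) !== -1;
      if (accountLevel && statement.resources.indexOf('*') === -1) {
        problems.push(`${action} must be granted on "*", not ${statement.resources.join(', ')}`);
      }
    }
  }
  return problems;
}
```

Running the lint over a statement that lists `ecr:GetAuthorizationToken` next to a repository ARN reports one problem; moving that action into its own statement on `"*"` clears it.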

The task definition itself contains nothing spectacular. We define the memory and CPU limits and assign the roles we created previously.

```typescript
const taskDefinition = new ecs.FargateTaskDefinition(this, 'CRestTaskDefinition', {
  memoryLimitMiB: 512,
  cpu: 256,
  taskRole: taskRole,
  executionRole: executionRole,
});
```
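Fargate does not accept arbitrary CPU and memory pairs. The sketch below encodes the valid combinations for the smaller task sizes as listed in the Amazon ECS documentation: 256 CPU units (0.25 vCPU) allow 512, 1024, or 2048 MiB, and each larger size allows a range in 1 GB steps. The function names are hypothetical, and the larger sizes (8 and 16 vCPU) are deliberately left out, so check the current documentation before relying on it.

```typescript
// Valid Fargate memory values (MiB) for a CPU value in CPU units (1024 = 1 vCPU).
// Covers 0.25 to 4 vCPU only; larger task sizes are intentionally omitted.
function validFargateMemory(cpu: number): number[] {
  const range = (min: number, max: number, step: number): number[] => {
    const values: number[] = [];
    for (let m = min; m <= max; m += step) values.push(m);
    return values;
  };
  switch (cpu) {
    case 256:
      return [512, 1024, 2048];
    case 512:
      return range(1024, 4096, 1024);
    case 1024:
      return range(2048, 8192, 1024);
    case 2048:
      return range(4096, 16384, 1024);
    case 4096:
      return range(8192, 30720, 1024);
    default:
      return [];
  }
}

function isValidFargateSize(cpu: number, memoryMiB: number): boolean {
  return validFargateMemory(cpu).indexOf(memoryMiB) !== -1;
}
```

The stack’s 256 CPU units with 512 MiB is the smallest valid pair, which suits a demo that only runs a small C program per request.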

Finally, we add a container to the task definition. Besides the image, we configure logging to CloudWatch Logs and expose container port 3333. Notice how `this.account` and `this.region` are used instead of hard-coded values, so the stack is not tied to a single account or region. The load balancer’s DNS name is passed to the container as the `ALB_DNS_NAME` environment variable.

```typescript
taskDefinition.addContainer('CApiContainer', {
  image: ecs.ContainerImage.fromRegistry(`${this.account}.dkr.ecr.${this.region}.amazonaws.com/c-rest:latest`),
  containerName: 'CApi',
  environment: {
    ALB_DNS_NAME: loadBalancer.loadBalancerDnsName,
  },
  logging: ecs.LogDrivers.awsLogs({
    streamPrefix: 'CApiLogs',
    logGroup: new logs.LogGroup(this, 'CApiLogGroup', {
      logGroupName: '/ecs/c-rest-api',
      retention: logs.RetentionDays.ONE_DAY,
      removalPolicy: cdk.RemovalPolicy.DESTROY,
    }),
  }),
  portMappings: [
    { containerPort: 3333, protocol: ecs.Protocol.TCP },
  ],
});
```
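The image reference passed to `fromRegistry` follows the standard ECR URI layout, `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. A small parser like the hypothetical one below can confirm that the repository in the image URI matches the one your IAM policy grants access to. It only handles the standard `amazonaws.com` domain (not, for example, the China regions) and tag references, not digests.

```typescript
interface EcrImageRef {
  account: string;
  region: string;
  repository: string;
  tag: string;
}

// Parses <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>; returns null otherwise.
function parseEcrImageUri(uri: string): EcrImageRef | null {
  const match = /^(\d{12})\.dkr\.ecr\.([a-z0-9-]+)\.amazonaws\.com\/([a-z0-9._\/-]+):([A-Za-z0-9_.-]+)$/.exec(uri);
  if (match === null) return null;
  const [, account, region, repository, tag] = match;
  return { account, region, repository, tag };
}
```

Comparing `parseEcrImageUri(uri)?.repository` with the repository name in the policy ARN (`c-rest` here) catches a renamed repository before the task fails to pull its image.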

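If you have read this far, congratulations: we have reached the final step before deployment. We now add the service and set up a load balancer listener that forwards traffic to the Fargate task. The code is largely self-explanatory if you read the attribute names: the service keeps one task running (`desiredCount: 1`) with a public IP, and the listener accepts HTTP on port 80 and forwards it to port 3333. The target group’s health check requests `/` every 30 seconds, so the Flask app must answer that path with HTTP 200 (the ALB default success code); otherwise the target is marked unhealthy.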

```typescript
const service = new ecs.FargateService(this, 'CApiFargateService', {
  cluster,
  taskDefinition,
  securityGroups: [securityGroup],
  desiredCount: 1,
  // With a public IP, the service is placed in the VPC's public subnets by default.
  assignPublicIp: true,
});

// Accept HTTP from anywhere on port 80 and forward it to the container on port 3333.
loadBalancer.addListener('CRestCApiListener', {
  port: 80,
  protocol: elbv2.ApplicationProtocol.HTTP,
  open: true,
}).addTargets('CRestTarget', {
  port: 3333,
  protocol: elbv2.ApplicationProtocol.HTTP,
  targets: [service],
  healthCheck: {
    path: '/',
    interval: cdk.Duration.seconds(30),
  },
});
```
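The health check is also why a fresh deployment does not serve traffic immediately: the load balancer only routes to a target after several consecutive successful checks. The sketch below is a simplified, hypothetical model of that counting. The default thresholds (5 consecutive successes to become healthy, 2 consecutive failures to become unhealthy) are the documented defaults for Application Load Balancer target groups, but they are plain parameters here, and the real ALB has extra states such as initial and draining that the model ignores.

```typescript
type TargetState = 'unhealthy' | 'healthy';

// Simplified model of ALB target health: the state flips only after enough consecutive results.
function evaluateHealth(
  checks: boolean[], // true = check succeeded, in chronological order
  healthyThreshold = 5,
  unhealthyThreshold = 2,
  initial: TargetState = 'unhealthy',
): TargetState {
  let state = initial;
  let successes = 0;
  let failures = 0;
  for (const ok of checks) {
    if (ok) {
      successes += 1;
      failures = 0;
      if (state === 'unhealthy' && successes >= healthyThreshold) state = 'healthy';
    } else {
      failures += 1;
      successes = 0;
      if (state === 'healthy' && failures >= unhealthyThreshold) state = 'unhealthy';
    }
  }
  return state;
}

// Rough estimate only; the real timing depends on when the first check runs.
function secondsUntilHealthy(intervalSeconds: number, healthyThreshold = 5): number {
  return intervalSeconds * healthyThreshold;
}
```

With the 30-second interval configured in this stack, the estimate is about 150 seconds, so give the service a couple of minutes after `cdk deploy` before concluding something is broken.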

Chef’s kiss: export the load balancer’s endpoint as a stack output so you can access the service.

```typescript
new cdk.CfnOutput(this, 'RunCProgramEndpoint', {
  value: `http://${loadBalancer.loadBalancerDnsName}/run`,
});
```

Step 5: Deployment

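To deploy the stack, you should probably set up a CI/CD workflow. However, this is just a demo, so we will use the CLI. Keep in mind that the task definition pulls `c-rest:latest` from ECR in your account and region, so the service can only start once that image has been pushed to a repository named `c-rest`. `cdk bootstrap` only needs to run once per account and region.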

```shell
npm run build
cdk bootstrap
cdk deploy
```

Conclusion

There you have it: that’s all I have for you today. I strongly encourage you to deploy and try the app; it can’t get much easier than this. The link to your service will be printed in the terminal as the `RunCProgramEndpoint` stack output. Visit it, and you will see the output of the C program in your browser.

The example is simple but, as I said at the beginning, very instructive. If you carefully go through all of the components, you will notice that we touched on a lot of interesting topics: containers, ECS, ECR, Fargate tasks, a Flask API, IAM roles, networking, security group settings, and even log retention. This also answers the question of what the benefit of a managed service is. Imagine having to set up all of this on your own. We take many things for granted without even thinking about them. It’s almost boring, and boring in IT is good.

Instead of goodbye, DON’T FORGET TO CLEAN UP THE RESOURCES if you deployed the stack.

```shell
cdk destroy
```